(Published June 15, 2023 by Mariven)

The causal and anatomical structure of the brain-body connection, its exploitability, the instrumental origins of causal articulation in interactive systems, and hints towards a theory of AI directability via manipulating internal causal structure.

Much of the motivation behind these ideas comes from my work on Worldspace, a cognitive framework which, among other things, functionalizes the trait (resp. faculty) of intelligence as an

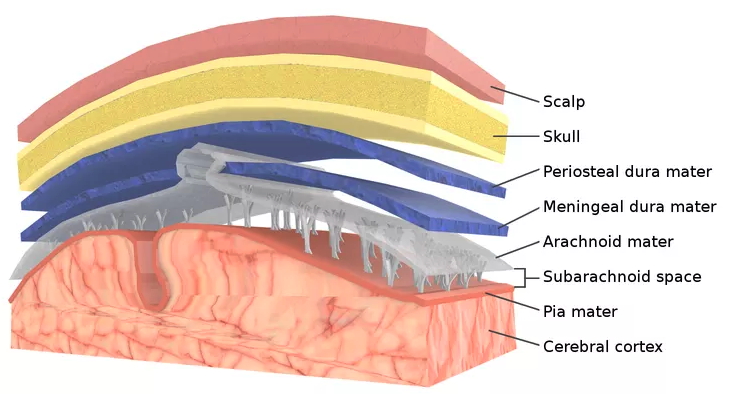

The brain is one of the most causally isolated structures in the human body. It's got a hard outer casing (the cranium), three layers of protective soft tissue under that (meninges), rests in a shock-absorbing fluid that passively supports homeostasis (CSF), and all blood entering it is stringently filtered by the blood-brain barrier.

Why so isolated? You need a stomach to live just the same, and yet the stomach is out fighting bacteria, chemicals, and physical trauma on the front lines while the brain hides in its fortress. But consider how damage affects each:

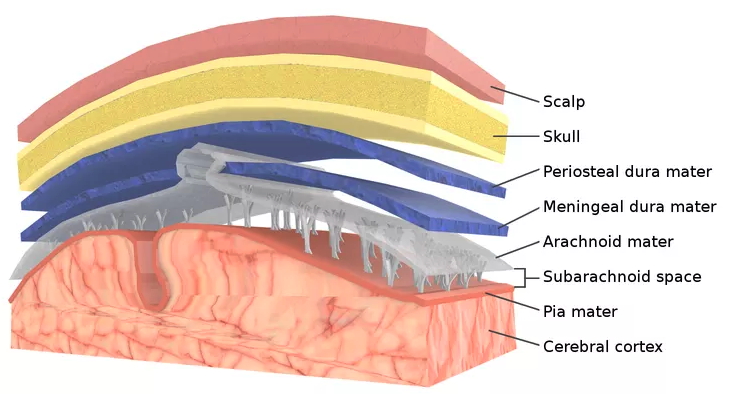

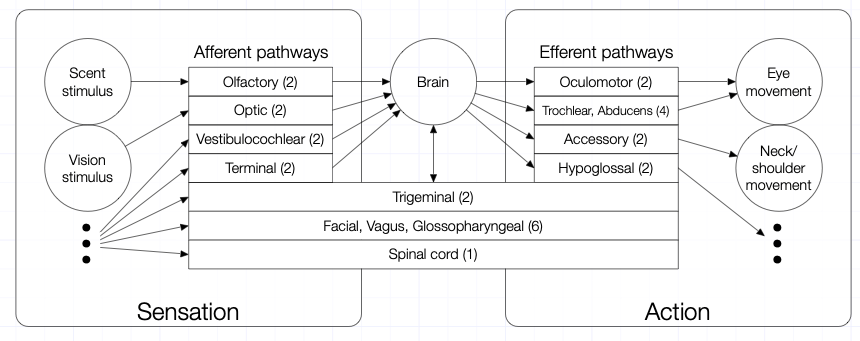

More than isolation, though, the paths of causality to and from the brain are tightly controlled. Ignoring the fluid tracts

The brain is the body's causal articulation organ; it's one of the only systems that can magnify subtle input variations (lights on a screen, spoken words) into tremendous output variations in an adaptive and non-chaotic manner. This makes it all the more vital that the body controls unwanted causal influences, influences not in the brain's "language" of neural signals. But the brain has its own methods of isolating itself as well.

Once a day, it goes to sleep, entering a state where all incoming sensory information is greatly attenuated, and outgoing motor commands that aren't autonomic (breathing, heart rate, etc.) are just blocked. What does the brain experience in this disconnected state? Fantasies, terrors, hopes, and fears; bizarre juxtapositions of past experiences and convolutions of future anticipations not dissimilar to the babbling of a foundational language model.

But these dreams are kept strictly internal. Our actions in our internal worlds don't leak out, and stimuli in the external world hardly

Many sleep disorders are in fact partial failures of this mechanism: it's very tricky to decouple the brain's experience from the body's affordances for sense and movement in a controlled and reversible manner; when the decoupling comes on too strongly, you might end up totally paralyzed before you're fully asleep, hunted down by hypnagogic horrors while unable to move or even scream; when it's too weak, you might end up actually walking or talking while fully asleep.

Pathologies in this mechanism, or more generally in the sleep-wake transition, can tell us a lot about how the causal articulation of the mind-environment interaction works. Anecdote: a couple years ago, I occasionally had false awakenings—episodes where one experiences waking up and going about their morning routine, only to realize that this was a dream, and they're still in bed.

They often happen back-to-back, two or three in a row before you actually wake up—which is a very surreal experience. I would get out of bed, brush my teeth, make cereal, and often have entire conversations, both in person and through text message

Now, in the perhaps thirty-second period between my being (falsely) confident that I'm eating cereal and my being (truly?) confident that I'm in bed, I'm experiencing two entirely different world-models. Are they running on different brain structures? Occam's razor says they're almost certainly not: given that the "tech" required to construct the experience of a cohesive external reality already exists in the brain, why would it be reinvented elsewhere? It's much more reasonable to say that the brain's construction of reality is the same whether asleep or awake, and that it's sort of like an ant: when there's no trail for it to pick up on, it just meanders in no particular direction, but, when it happens upon a trail (a causal process that generates a manifold of inputs it's capable of binding into a single coherent, enduring experience), it quickly entrains to that trail; that is its reality.

In general, the brain's causal control is not causal isolation. If I chuck a pebble at someone's head, I do, in fact, manage to affect their brain: the impact is converted into nerve signals which go to their brain, where they generate a feeling like 'ow!' and an impetus to turn around and curse at me. But note that the causal influence is not direct: it's always translated to the brain's own language, that of neural signals, in a safe way that cannot physically harm the brain. The body is a filter for real-world inputs, and it's only through this filter that the brain can be affected by the outside world in a way that allows it to model this world. You can chuck a battle axe at someone's head and thereby directly physically influence the state of their brain, but this influence will be illegible: it will be nothing that the brain (which has no nociceptors; it cannot itself feel anything) can understand and intelligently respond to.

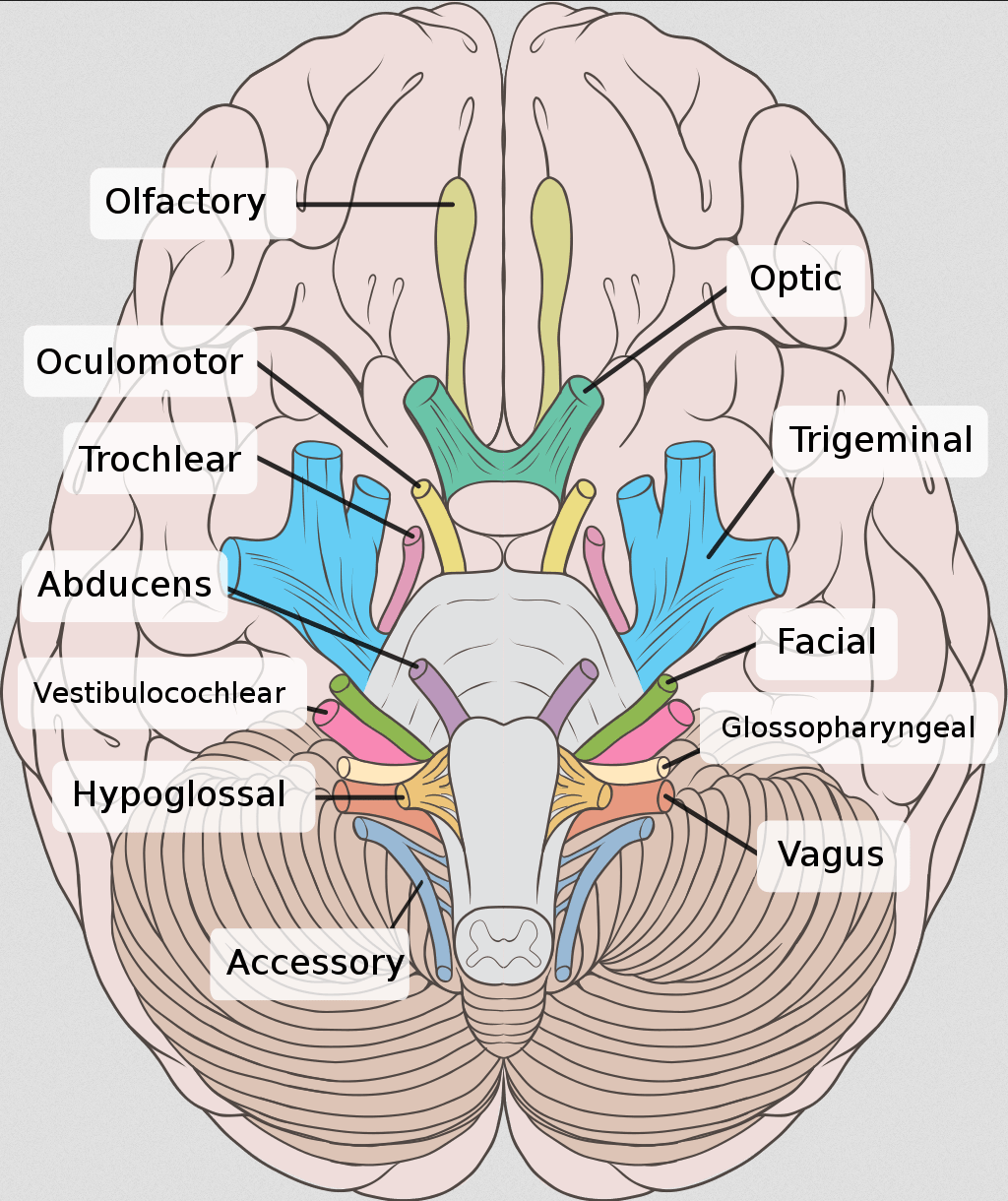

If we were to draw a causal model representing the way in which signals to the brain are safetyproofed, part of it might look like this:

Without cerebrospinal fluid and meninges, for instance, a bumpy car ride might cause your brain to rattle around your skull; with them, your brain is insulated from the direct impact of the jerks and accelerations, only receiving them indirectly through proprioception and tactition. Causal influences which would be dangerous if absorbed directly are turned into harmless

Often, real-life causal models just get arbitrarily complicated as we zoom in on details, and never really split up into discrete sets of causes or effects. But the causal control of the brain admits graphical depiction: ignoring blood and CSF movement

Some terminology: afferent pathways conduct nerve signals that arrive at the brain with sense-information, while efferent pathways conduct nerve signals that exit the brain with motor commands.

All rectangles save the spinal cord are cranial nerves. On each hemisphere, there are:

Finally, by 'spinal cord' I mean the aforementioned dime-sized portion at the end of the medulla oblongata. These are all the places where neural signals can enter or exit the brain; counting them, we find that there are only $(13 \times 2)+1=27$ connections that you have to control if you want to completely isolate the brain from the body and external world.

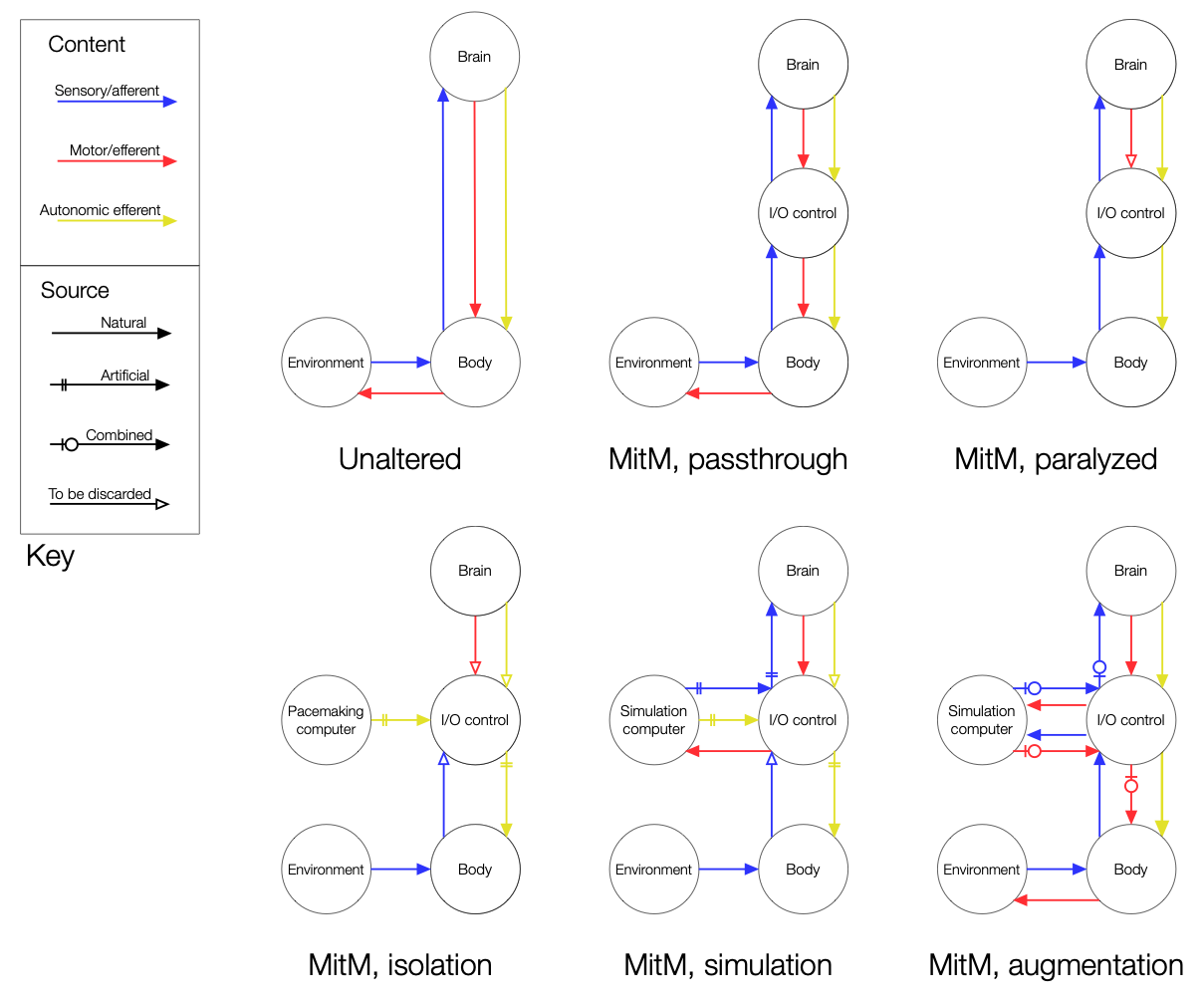

Imagine I perform a surgery on some poor fool in which I insert a thin disc through each of these twenty-seven connections: each disc is capable of inputting and outputting arbitrary neural signals on either of its faces, and the collection is therefore capable of performing a man-in-the-middle (MitM) attack on someone's brain. With such an apparatus, I could make their body perform any action that some motor signals would make it perform, independent of what motor signals they're actually trying to send. I could make them juggle or play the violin as though they had been practicing their whole life, make conversation while maintaining eye contact, or anything else they'd ordinarily be incapable of due to mental factors. Or—were most efferent signals to just be blocked, they'd find themselves paralyzed

While puppeting the brain's motor output, I can also puppet its sensory input, making their mind experience any world that some sensory signals would make it experience, independent of what sensory signals the body's actually trying to send. The nice thing to do would be to simply replicate both sensory signals going to their brain and motor/autonomic signals leaving their brain; this "passthrough mode" would be indistinguishable from being unaltered. On the other hand, I could block all signals going either way, using a pacemaking computer to keep the body going; this "isolation mode" would be a perfection of the sleeping state.

But. The real fun starts when I get a computer capable of simulating a world. When it is able to (a) simulate a full world which one can interact with through an avatar, (b) decode all efferent signals into movements of the avatar, and (c) encode the avatar's simulated sensory stimuli into afferent signals, then by blocking all motor signals going to the body

Would you die in real life if you died in the sim? No, of course not. I very strongly doubt that any afferent signals corresponding to actual fatal experiences would be able to manipulate the brain into shutting off, except perhaps for those reverse-engineered to do so—and these will be weird, just-barely-interpretable experiences drawn from the entropic sea rather than perfectly simple, legible things like being shot in the head. This is why images that kill you when you look at them, like this one, look the way they do.

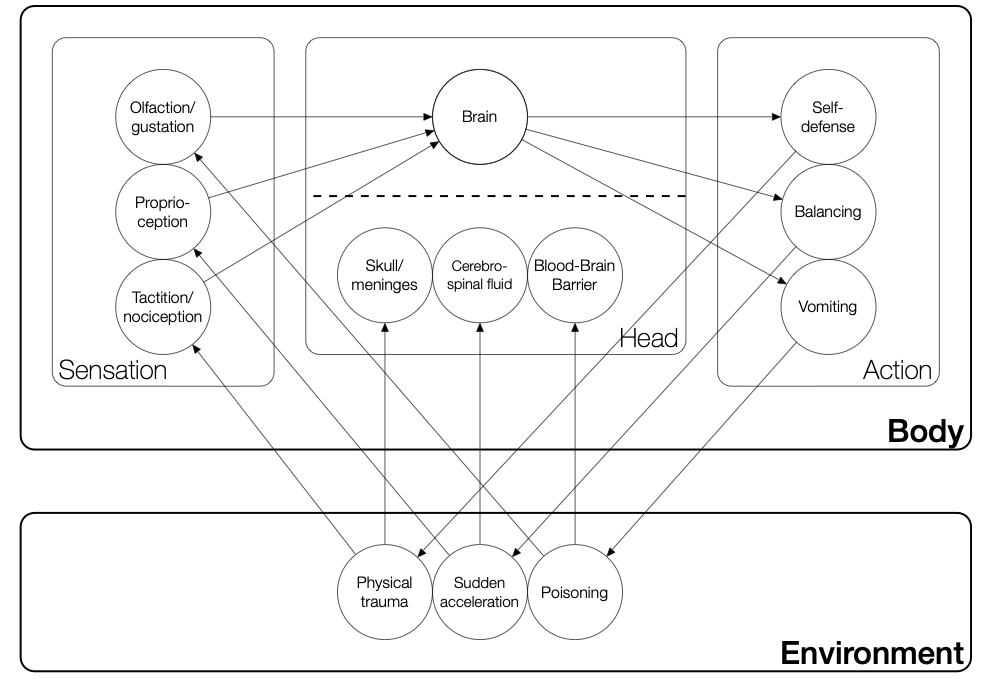

Many of the possibilities described above are represented schematically as causal networks in the diagram below; the lower-right network is a reality-augmentation scheme generalizing the simulation scheme.

The autonomic arrows can be ignored for most purposes; the story of each scheme is given by tracing the afferent (blue, sensory) flows upwards and the efferent (red, motor) flows downwards. In the simulation scheme, for instance, the afferent path tells us that environmental stimuli become sensory signals in the body, but are blocked by our discs ("I/O control"), which replace them with artificial sensory signals computed in a simulated world. The efferent path tells us that the motor actions the brain plans in response to its experience of the simulated world are relayed not to the body but to the computer, as is required to give them control over their avatar.
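The three schemes traced above (passthrough, isolation, simulation) can be sketched as a single routing rule. This is only a toy illustration under loud assumptions: the names `IOControl`, `Mode`, `step`, and `toy_world` are hypothetical, neural signals are stood in for by plain dictionaries, the 27 discs are collapsed into one interface, and the autonomic channel (the pacemaking computer) is ignored entirely.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Optional, Tuple

Signal = dict  # hypothetical stand-in for a bundle of neural signals


class Mode(Enum):
    PASSTHROUGH = auto()  # relay everything unchanged
    ISOLATION = auto()    # block both directions
    SIMULATION = auto()   # swap the body and environment for a simulated world


@dataclass
class IOControl:
    """Toy stand-in for the discs, treated as one interface."""
    mode: Mode
    # Hypothetical simulator: maps decoded motor output to the avatar's
    # next sensory input. Required only in SIMULATION mode.
    simulate: Optional[Callable[[Signal], Signal]] = None

    def step(self, from_body: Signal, from_brain: Signal
             ) -> Tuple[Optional[Signal], Optional[Signal]]:
        """Return (signals delivered to the brain, signals delivered to the body)."""
        if self.mode is Mode.PASSTHROUGH:
            return from_body, from_brain
        if self.mode is Mode.ISOLATION:
            return None, None
        # SIMULATION: motor output drives the avatar; the real body gets nothing,
        # and the real body's sensations never reach the brain.
        return self.simulate(from_brain), None


def toy_world(motor: Signal) -> Signal:
    """A one-rule 'world': grasping yields the feeling of a ball."""
    return {"touch": "ball" if motor.get("hand") == "grasp" else "nothing"}


disc = IOControl(Mode.SIMULATION, simulate=toy_world)
to_brain, to_body = disc.step({"touch": "bedsheet"}, {"hand": "grasp"})
print(to_brain, to_body)
```

The point of the sketch is that the brain-side code never changes between modes; only the routing at the boundary does.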

I suspect that causal articulation is a common property of the kinds of systems that we'd call intelligent, and in such a way as to make man-in-the-middle attacks like the one depicted above for the human brain possible for general artificial intelligences.

There's a sort of "sensorimotor bounding" criterion that arbitrary optimizing systems must satisfy for us to be able to model them as optimizing systems: they act on the world in a certain way which takes into account the state of the world, and therefore have to have at any given point in time some interfaces through which effects (changes with causal import) flow "into" and "out of" them. Some systems are fuzzy and non-Cartesian enough that we can't get anything out of treating them in this way, but those we care about will be treatable in such a way. You can call globotechnosociocapitalism the thing that'll really kill us, but, however you slice it, it's too big, too fuzzy, too hard to bound. Artificial intelligences, on the other hand, can be bounded, albeit not spatially

Take a DAO, for instance. It's understandable as an optimizing system. But as a physical system? Even if you manage to say anything useful about the ways in which modern computers physically articulate their computations as electromagnetic field changes, there's still the fact that the (say, Ethereum) blockchain is a stochastic jumble of computations carried out by computers throughout the world. Even if you figure that out, you have to deal with the fact that the DAO itself, while governed by its smart contract, is instantiated by countless tiny changes to the state of the Ethereum Virtual Machine, stochastically jumbled across the blockchain itself. To solve the problem once is akin to unscrambling an egg; here, you're trying to unscramble an egg with a cocktail shaker in a house fire. The only good strategy is to rethink your life.

In general, you need to understand a system's semantics in order to perform any sort of sensorimotor bounding, since many of the systems we care about, including optimizing systems, don't have any meaningful physical instantiation. Obviously, since we are physical, anything that can actually affect us must do so through its physical effects, but many of the everyday systems we reason about are implementation-agnostic: they have the same qualitative behavior regardless of how they're physically instantiated. If you wire me a million dollars, all you've physically done is shuffle a few electromagnetic signals in some servers; but you'd never be able to pinpoint the changes in the ${\bf E}$ and ${\bf B}$ fields; in fact, you shouldn't be able to, because a wire transfer should be agnostic as to which banks we use, where the servers are located, whether they use SSDs or HDDs, or all the other things which massively change the physical instantiation of your action. This physical illegibility and indeterminacy of the transfer are kept under control by the semantics of our computational and banking systems, which allow me to shuffle more electromagnetic signals in a way that ends up producing very clear physical events, like the sudden migration of tens of thousands of beanie babies to a single apartment in the United States.
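A minimal sketch of what implementation-agnosticism looks like in practice, assuming a made-up `Ledger` interface (none of these names come from any real banking system): two ledgers store their state in completely different ways, yet a wire transfer has the same meaning against either.

```python
from typing import Protocol


class Ledger(Protocol):
    """Hypothetical interface: the 'semantics' of a transfer."""
    def transfer(self, src: str, dst: str, amount: int) -> None: ...
    def balance(self, account: str) -> int: ...


class DictLedger:
    """Stores current balances directly."""
    def __init__(self, balances):
        self._balances = dict(balances)

    def transfer(self, src, dst, amount):
        self._balances[src] -= amount
        self._balances[dst] = self._balances.get(dst, 0) + amount

    def balance(self, account):
        return self._balances.get(account, 0)


class LogLedger:
    """Stores only an append-only log and recomputes balances on demand."""
    def __init__(self, balances):
        # Opening balances are recorded as credits from no one.
        self._log = [(None, acct, amt) for acct, amt in balances.items()]

    def transfer(self, src, dst, amount):
        self._log.append((src, dst, amount))

    def balance(self, account):
        credit = sum(a for _, d, a in self._log if d == account)
        debit = sum(a for s, _, a in self._log if s == account)
        return credit - debit


def wire(ledger: Ledger, amount: int) -> int:
    """Send `amount` from 'you' to 'me' and report my new balance."""
    ledger.transfer("you", "me", amount)
    return ledger.balance("me")


opening = {"you": 2_000_000, "me": 0}
print(wire(DictLedger(opening), 1_000_000), wire(LogLedger(opening), 1_000_000))
```

Nothing about the caller of `wire` reveals which storage scheme sits underneath, which is the sense in which the bounding has to happen at the level of semantics rather than physics.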

Any AGI, insofar as it senses and acts on a world, constructs its sensations in and dispatches its actions on an internal representation of the world. Attempts to understand what this representation directly says about the world may not prove successful, but, by understanding how to locate and bound any such representation, we can modify and safetyproof its relation to our reality. For instance, there's no necessary reason that the AGI ought to locate whatever it calls utility in our reality; if merely dreaming of utility is good enough for it, with us modifying its dreams so it ends up thinking up solutions to our problems, that may be good enough for us. If it thinks about and acts on controlled realities, whether our simulated worlds or its dream worlds, and if it places its notions of self, sense, action, environment, and utility entirely in these realities, we can control it without even having to restrict it.

The original essay was meant to be short and simple; these are parts I wrote, but cut from it. Instrumental Causality discusses the manner in which causal articulation arises out of necessity (or instrumentality) in optimizing systems, and how we are to understand and model it; Cartesian Confusion discusses the manners in which we come to believe we're directly observing and acting on reality (and therefore think ourselves to be "above" sensorimotor bounding).

A dialogue on the a priori origins of causal articulation in physical systems.

Alice

How do you protect a physical thing from the outside world?

Bob

Rephrase the question. Internal/external influence breaks down when you consider temperature, entropy, decay, and so on; what we can feasibly want is to keep an adversarial outside world from altering a physical thing in a way that we don't want. Because (a) we do not know what an adversarial agent might attempt, (b) most things we want to protect are in certain organized, or low-entropy, states that make them valuable in the first place, and (c) even random changes to a low-entropy state will almost always raise its entropy, making it less valuable, we ought to close off all relevant causal channels, putting emphasis on each in proportion to the extent to which that channel can effect the kinds of changes to the object that we count as a raising of entropy. If the low-entropy object is a brain, then we want to protect it from the things that can easily shake it up, destroy its information—chemical, physical, biological attacks—rather than from radio waves or magnetic fields, which, at the energy scales made 'canonical' by the natural environment, don't really alter the brain. (It's not impossible, see TMS, but it just doesn't really happen).

Alice

But if you cut it off from the world, what's the point of having it? Aside from sentimentality, we usually protect things because they're useful to us, which requires our keeping them open to interaction. So, take the thing in question and suppose it ought to be able to interact with the outside world—that's where it derives its use. How do you alter your protections to accommodate this?

Bob

Create controlled interfaces between the thing and the outside world. Find the smallest possible causal channels required to convey whatever effect the inside thing ought to be having on the outside thing, find the ways to implement them that interfere the least with the existing isolation mechanisms, and guard them very carefully to make sure nothing passes through them that isn't of their causal type.

Alice

There are three unstated assumptions that threaten this approach, as you've laid it out:

Bob

I don't see how this logic of partial accommodation is anything but a random buildup of congruences. You've pointed out that humans talk and eat through the same hole, but this is just a quirk of human anatomy. That we use the same physical structures for talking and eating is a random result of the fact that those structures were already in place, serving some other purpose, when the need to talk arose. Our mouths and throats are an accident of evolution.

Alice

That's exactly what I mean. Those biological structures that were already in place for eating lent themselves to co-optation for a new purpose which, while subjectively independent, could be achieved through shared objective means. Necessity, then opportunism.

It's as though you have a ring $R$ of causal effects, and a subset $S$ chosen subjectively, independently of the objective ring structure, representing those effects you want to allow. If you want to reify $S$, you need to deal with the fact that any physical structures you create will be usable in ways not dependent on $S$, because $S$ isn't a property of the physical world—it's a property of the way you've chosen to look at the world. The reification, as another subset of $R$, will consist of all possible ways that the physical structures necessary in the creation of $S$ could be used. This objectification will look like the ideal generated by $S$, $\langle S\rangle$.

The simplest case is that $R$ is a principal ideal domain: if your entire universe is sending emails to addresses, and you want to develop the ability to send five particular emails to five particular addresses, then you'll need to create the ability to send arbitrary emails to arbitrary addresses. For general $R$, things will be far more complicated, but you'll always find that the ideal is articulated by common denominators among the elements of $S$.
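(An aside to make the metaphor concrete. A minimal sketch, assuming we take $R$ to be the integers, which form a principal ideal domain: there, $\langle S\rangle$ is just the set of multiples of the greatest common divisor of $S$. The function names are made up for illustration, and $S$ is assumed to contain at least one nonzero element.)

```python
from functools import reduce
from math import gcd


def ideal_generator(S):
    """Generator of the ideal <S> in the integers: the gcd of the elements of S."""
    return reduce(gcd, S)


def in_ideal(x, S):
    """x lies in <S> exactly when the generator divides x."""
    return x % ideal_generator(S) == 0


S = [12, 18, 30]        # the effects we subjectively wanted to allow
g = ideal_generator(S)  # the common structure needed to realize all of them

# Effects we never asked for, but which the same structure also permits:
extras = [x for x in range(1, 40) if in_ideal(x, S) and x not in S]
print(g, extras)
```

Building whatever it takes to produce 12, 18, and 30 means building the ability to produce every multiple of their common divisor; the unrequested elements in `extras` come along for free.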

Now, how do you implement your protections for our thing in such a way as to allow it to interact with the world in some particular ways?

Bob

I guess... we have to find common sets of underlying physical correlates for our causal channels and implement guarding mechanisms on this physical level? Trying to work on the physical level directly seems much more dangerous, though: we can never work out all the macro-level phenomena that come from atomic movements, and generally only see what our subjective motives guide us to see.

To use the ideal metaphor, we never really see the whole ideal $\langle S\rangle$. We have our own subjective frameworks, and don't really have the motivation, creativity, or combinatorial capacity to conceive of all the ways that our constructions can be combined. Basic computers could've been created with the telegraph relays available in the early 19th century; the ancient Chinese used heated air to float balloons for signaling purposes, but never scaled up to human flight.

An adversary that conceptualizes subjective causal channels in a slightly different manner, whether due to different motives or a different style of cognition, could see an entirely new angle to the same objective reality—could exploit parts of $\langle S\rangle$ that we'd never see. So, by trying to work at the level of physical reality, aren't we playing a much less certain game?

Alice

You're playing the exact same game: the exploits your causal channels allow don't go away upon your reframing the situation. Thinking of physical realities just lets you see how uncertain this game is. The moment we sought to both protect a thing and allow the outside world to interface with it, we let this uncertainty enter; these goals are in conflict, and this is the resulting tension.

Our subjectivity will always be our greatest vulnerability: we think of developing methods for controlling interactions, but even the notion of an "interaction" is a mental construct, a subjective fabrication on top of reality, and, like most such constructs, it breaks when we put too much weight on it. An artificial superintelligence may be able to keep track of statistical ensembles of particles in a way that allows it to formulate and place guarantees on the behavior of all macro-level phenomena

Bob

Should we not then defy the question itself, saying that it just isn't right to talk about taking on causal articulation as a goal?

Alice

Evolution did not decide to causally articulate the brain. Don't think of it as a goal that one consciously has in mind. I'm emphasizing what naturally happens because I want you to think of causal articulation as an instrumental correlate. Arbitrary goals in the real world often end up having us protect some MacGuffin while simultaneously keeping it open for interaction, and causal articulation strategies are created to this end; because they're generally not conceived of as such, they end up evolving in the manner I described: messy, overlapping, and mixed up.

Bob

Evolution doesn't have a mental construct of interaction. So why does its causal articulation control interactions?

Alice

The notions of causal articulation and interaction exist within us, in application to things, whether we're directly instantiating them or merely referencing them. If they were puppets, you'd be manipulating both; and, after making one push the other, you'd be asking me why it did that.

Bob

But don't we have mathematical proofs about thi—

Alice

No! You have mathematical proofs about conceptual constructs. Whenever you apply them to reality it will always be by assuming that reality is in a certain way, that this aspect of reality is not only clearly delineable but identifiable with this mathematical object, that this aspect of reality is genuinely coherent to speak about as a numerical parameter, and so on and so on.

Bob

Then why does mathematics seem to apply to the world so well?

Alice

Because when we can't mathematically model a situation, we don't use mathematical models. There is something about reality which allows mathematical structure to appear in a sort of fuzzy, fractal way even at the level of macroscopic conceptual delineations; that much is clear, but it does not approach the level of axiomatizability. What mathematics we do see working is either the product of engineering, which does not admit axioms and proofs; computer science and statistics, which do in fact suffer from the fact that we cannot apply their proofs directly to reality; or physics, which does sometimes seem to provide true mathematical limits on reality by virtue of limiting possible experience, but pretty much arrives at these in a haphazard heuristic way, with mathematicians trailing behind in an attempt to construct axioms that manage to prove what the physicists already know

(end dialogue here before we get too off track)

The schema of causality lends a deep sense to our understanding of interactions between systems. Natural selection, for instance, is a fundamentally aleatoric force whose creation of complex modular systems such as ourselves seems almost absurd at first; but when its designs are understood as abstract structurings of causal flows within an environment (e.g., we think of "the" human, "the" mouth: not any particular human, any actual mouth, but abstract designs giving rise to abstract environmental interactions such as "eating", "talking"), we can understand why these designs turn out the way they do. We can make sense of the ubiquitous multicausality of our organs this way: mutation pressure is simply taking what is given to it and marginally adjusting it, and, when these adjustments happen to open up entirely new causal flows (e.g., when the breathing and eating apparatus becomes useful for 'vocalizing'), natural selection rapidly pursues the newly available fitness gradients.

Another area in which the schema of causality makes such deep sense of things is in the design of technological interfaces. In cognizing interactions between abstract systems, we can think about what causal flows are inherent in a given goal: if there's some API which has to permit access to medical records on a remote server to users with the right credentials, for instance, then it's easy to see that the goal necessarily requires the remote server to be open to arbitrary data inputs from arbitrary computers; given how networks work, this causal channel must be opened for the goal to be possible at all. The very fact that it parses credentials at all tells us about what causal flows must necessarily be allowed to pass through this channel: contingent on an input having some structure that any possible valid request could have (e.g., it encodes a well-formatted JSON request from a non-blacklisted host)

There is a largely mathematizable structure to interaction which is made visible to us through the schema of causality: the lens of causal channels, causal flows

Note that there does seem to be plenty of work on this problem, related to certain causal approaches to quantum gravity (see e.g. this), but I'm not familiar enough with it to have an opinion.

There are two related illusions conflated by this single word. The first is dualistic: that one can be cleanly divided from the world, rather than being embedded into it. The second is agentic: that one receives observations directly from the world, that one chooses actions to directly make upon the world. Both of these arise from the illusion of a self, projected by humans not just onto ourselves but onto the conceptual fabrications we call 'systems'. When we reify, say, evolution, we imagine it as an intelligent actor: an eye separate from what it sees, a hand separate from what it manipulates. When we think of this actor using the same fundamental schemas with which we think of ourselves and others (e.g., as 'wanting' certain outcomes), we imagine it seeing and acting on a reality external to itself. For now, though, it'll be the second illusion I'm concerned with, and the way both aspects of it (the non-Cartesian nature of both perception and action) are made clear by the causal articulation of the brain.

When I see a yardstick, a clock, or any other physical object while walking around my bedroom during a false awakening, where is that object located? Even if my dream is correct about the object's location and appearance within my room, the object that appears in my dream can only physically be in my brain. But my brain isn't even a yard long, so in what sense can it contain the yardstick I see? It can only be in the sense that the yardstick is, as an experienced object, the product of neural activity. It's like this: if I'm painting a bowl of grapes that sits in front of me, then, no matter how realistic my painting, it is still fundamentally not the bowl of grapes itself, just a certain pigmenting of my canvas which I've managed to couple very finely to the appearance of the actual bowl of grapes

If I truly wake up, and see the yardstick right after getting out of bed, then what the object is for me has not changed; given the way false awakenings chain together, I can't even justifiably say whether it's real or not. What has changed is that the experienced yardstick is now coupled by my perceptive apparatus to the physical yardstick—but the fact of this coupling is not immediately deducible from the experience being coupled. It is defeasibly deducible in general from the hidden coherences reality has (I won't explain here), but this is neither immediate nor applicable to particular cases.

This is all to make the obvious point that the world as you experience it is necessarily in your head, and whether it's "real" or not consists only in whether the perceptive process serves to predictably couple it to the physical facts. You only ever interact with the canvas, but there happens to be a system which, when you paint an arm reaching for the painted grapes, reaches a real arm for the real grapes

If our subject tries to pick up a ball on the ground but doesn't feel their arms moving concurrent with their sending the neural signals that move their arms, they might notice something's up! The easiest and most natural solution to this is to just let them control their simulated avatar as they'd control their real body. But in principle, our ability to prevent our subject from realizing something's off depends only on what they sense, not their actions. If we rig the environment such that they only ever decide to make actions which they expect to induce sensations that happen to correspond to the sensations we're going to provide, we don't actually have to take their actions into account at all. But this strategy requires us to 'read their minds' to an extent: since the causal network looks like $\text{sensation} \to \text{brain} \to \text{action}$, even full knowledge of $P(\text{action} \mid \text{brain}, \text{sensation})$ won't allow you to compute $P(\text{action} \mid \text{sensation})$ unless you also know their actual brain state or have full knowledge of $P(\text{brain} \mid \text{sensation})$ as well. (The latter is possible for very powerful sensations: if you give them the sensation of suddenly being stabbed in the eye, then probably nothing will go wrong if you throw away their next actions and just decide to give them the sensation of bringing their hands up to their face and screaming.)
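The dependence on the brain state can be made explicit by marginalizing: $P(a \mid s) = \sum_b P(a \mid b, s)\,P(b \mid s)$, and in a chain the first factor reduces to $P(a \mid b)$. Here is a minimal sketch with two brain states and two actions; every name and number is invented purely for illustration.

```python
# Toy discrete model of the chain sensation -> brain -> action.

# P(action | brain). In a chain, once the brain state is known the sensation
# adds nothing, so this also serves as P(action | brain, sensation).
p_action_given_brain = {
    "calm":     {"reach": 0.9, "flinch": 0.1},
    "startled": {"reach": 0.2, "flinch": 0.8},
}


def p_action_given_sensation(p_brain_given_s):
    """Marginalize over brain states: sum_b P(a | b) * P(b | s)."""
    out = {}
    for brain, p_b in p_brain_given_s.items():
        for action, p_a in p_action_given_brain[brain].items():
            out[action] = out.get(action, 0.0) + p_a * p_b
    return out


# Two subjects share the same P(action | brain) and receive the same
# sensation, but differ in P(brain | sensation):
subject_1 = {"calm": 0.9, "startled": 0.1}
subject_2 = {"calm": 0.1, "startled": 0.9}

print(p_action_given_sensation(subject_1))
print(p_action_given_sensation(subject_2))
```

The same conditional table yields very different action distributions depending on $P(\text{brain} \mid \text{sensation})$, so the table alone can't tell you what the subject will do. The stabbed-in-the-eye case corresponds to a sensation that pushes nearly all the probability onto one brain state, at which point the sum collapses to a single row.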

But the entire point of the experiment is to demonstrate how we can isolate the brain from the body and environment, so let's follow a "cerebral sovereignty" principle, rejecting strategies that require us to guide the brain into specific states, and a "cerebral privacy" principle, rejecting strategies that require us to gather direct knowledge about brain states. It still follows that movement can generate the percept of action, and action can generate the percept of movement, without any actual relation between them.

The next time you're sitting somewhere and find that your leg's gone into a deep enough sleep to render your foot immobile, consider trying really really hard to move it. From my own experience, this just won't work—the leg wakes up on its own terms—but there is definitely something it is like to send that motor signal: it's like holding down a mental button, or lever, which sort of impels one's experienced body to be in a certain way. This often comes with a slight feeling that the foot is in fact moving, but you can visually confirm that it's perfectly still. When the leg wakes up again, it's nearly impossible to feel this mental lever.

I would use an analogy: I've played enough PlayStation games to be instinctively familiar with the layout of a PlayStation controller. So when playing a new PlayStation game, I can start learning the controls the moment I pick up the controller, and from then on my actions won't parse as "$\bigcirc$, $\mathsf{R1}$, $\square$, $\mathsf{R2}\ldots$, $\triangle$, $\mathsf{R3}$" but as "heavy attack, block, light attack, charge up special, kick, activate special"

The thrust of the analogy is this: my ability to send specific motor signals to specific parts of my body is like a PlayStation controller I've been using since the day I was born. Even though the mental buttons are there in some sense, I'm never conscious of pressing them, just of the consequent movements. And if I try to isolate the experience of pressing the button without doing anything about the movements, I'll just end up confusing it with something else like a constructed kinesthetic extrapolation. But if an external factor can render a button unresponsive (in this case, a leg falling asleep), and I can do the thing that corresponds to mashing that button (trying really really hard to move my foot), I have the opportunity to recognize that there's a button here that I'm mashing.

(In general, the brain does a certain thing that's like... extrapolative development

Going back to the topic of misleading someone as to the body they're controlling, this gives us a perspective more compatible with the physical facts: it's as simple as unplugging their mental controller and plugging it into a port of your own creation.